🚀 Advanced Augmented Reality: The Next Layer of Our Digital World

Introduction

Imagine looking at a city street and instantly seeing the history of each building, a 3‑D model of a dinosaur walking beside you, or real‑time data about the air you breathe—all without picking up a tablet. That’s Augmented Reality (AR), a technology that blends computer‑generated content with the physical world. While the basics of AR (think Pokémon GO) are now familiar, the advanced side pushes the boundaries of perception, interaction, and even ethics. This guide dives deep into the science, the societal ripple effects, and the career paths waiting for the next generation of innovators.

1. Foundations of Augmented Reality: From Pixels to Perception

| Component | What It Does | Key Terms |
|---|---|---|
| Display Hardware | Projects digital overlays onto the eyes or a screen. | Head‑Mounted Display (HMD), Waveguide optics, Retinal projection |
| Sensors & Actuators | Capture motion, depth, and environmental data. | Inertial Measurement Unit (IMU), LiDAR, Time‑of‑Flight (ToF) cameras |
| Software Stack | Interprets sensor input, renders graphics, and syncs with the real world. | Rendering pipeline, SDK (e.g., ARCore, ARKit), Middleware |
| Connectivity | Streams data and updates in real time. | 5G, Edge computing, Low‑latency networking |

Why It Matters:

- Spatial Fidelity – The tighter the alignment between virtual and physical objects, the more convincing the experience.

- Latency – Even a 20‑millisecond delay between a head movement and the matching display update (motion‑to‑photon latency) can cause motion sickness; engineers strive for sub‑10 ms response times.
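
To make the latency target concrete, the sketch below adds up a hypothetical end-to-end budget and compares it with the 20 ms and 10 ms figures mentioned above. The stage names and timings are illustrative assumptions, not measurements from any real device.

```python
# Hypothetical per-stage delays in milliseconds (illustrative values only).
STAGE_DELAYS_MS = {
    "sensor_sampling": 2.0,
    "pose_tracking": 3.0,
    "rendering": 8.0,
    "display_scanout": 5.0,
}


def total_latency_ms(stages):
    """Sum the per-stage delays to get the end-to-end latency."""
    return sum(stages.values())


def frame_time_ms(refresh_hz):
    """Time available per frame at a given display refresh rate."""
    return 1000.0 / refresh_hz


if __name__ == "__main__":
    total = total_latency_ms(STAGE_DELAYS_MS)
    print(f"End-to-end latency: {total:.1f} ms")
    for target in (20.0, 10.0):
        status = "within" if total <= target else "over"
        print(f"  {status} the {target:.0f} ms target")
    print(f"Frame time at 90 Hz: {frame_time_ms(90):.1f} ms")
```

With these made-up numbers the pipeline fits a 20 ms budget but misses a 10 ms one, which is why every stage, not just rendering, tends to be a target for optimization.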

2. Advanced AR Techniques: Mapping, Intelligence, and Interaction

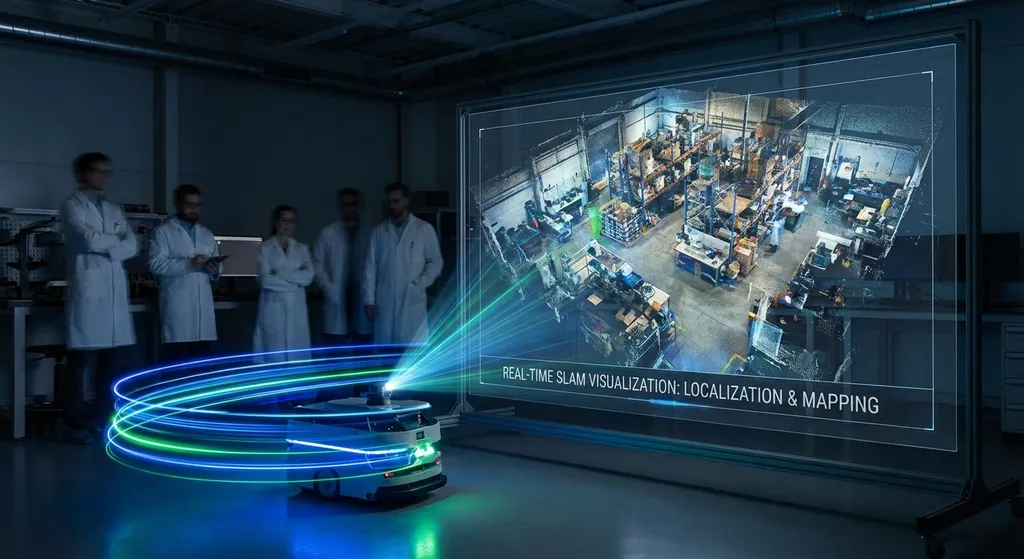

A. Simultaneous Localization and Mapping (SLAM)

SLAM algorithms let a device map its environment while tracking its own position within it. By fusing IMU data with visual cues, the system builds a sparse point cloud and continuously updates its pose estimate (position and orientation).

Evidence: A 2022 IEEE study showed that modern visual‑inertial SLAM can achieve positional errors under 1 cm in indoor settings, a tenfold improvement over 2015 benchmarks.
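
The idea of fusing IMU data with visual cues can be sketched in one dimension. This is a deliberately simplified complementary-filter-style blend, not a real SLAM back end (production systems typically rely on Kalman filtering or nonlinear optimization). The `State` class, the function names, and the `gain` parameter are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class State:
    position: float  # metres along one axis
    velocity: float  # metres per second


def predict(state, accel, dt):
    """Dead-reckon from one IMU acceleration sample (constant-acceleration model)."""
    new_velocity = state.velocity + accel * dt
    new_position = state.position + state.velocity * dt + 0.5 * accel * dt * dt
    return State(new_position, new_velocity)


def correct(state, visual_position, gain=0.2):
    """Pull the predicted position toward a camera-derived position fix.

    gain is an assumed blend weight in [0, 1]:
    0 ignores the camera, 1 trusts it completely.
    """
    blended = state.position + gain * (visual_position - state.position)
    return State(blended, state.velocity)


if __name__ == "__main__":
    state = State(position=0.0, velocity=1.0)
    state = predict(state, accel=0.0, dt=0.1)      # IMU step
    state = correct(state, visual_position=0.12)   # camera fix
    print(state)
```

The IMU step runs often and drifts over time; the less frequent visual correction reins that drift in. That division of labour is the core intuition behind visual-inertial tracking.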

B. Occlusion & Depth Perception

Advanced AR can render virtual objects so that they appear behind real‑world items, which requires accurate depth maps. Techniques include:

- Depth‑aware compositing using LiDAR or structured light.

- Neural Radiance Fields (NeRFs) that synthesize photorealistic 3‑D scenes from sparse images.
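
Depth-aware compositing boils down to a per-pixel depth test. The following is a minimal sketch using plain Python lists; the function name and the use of `None` for "no virtual content" are assumptions made for illustration, and a real renderer would do this on the GPU.

```python
def composite(camera_rgb, real_depth, virtual_rgb, virtual_depth):
    """Show a virtual pixel only where it is nearer than the real surface.

    All inputs are 2-D lists of the same shape. Depths are in metres;
    a virtual depth of None means there is no virtual content at that pixel.
    """
    output = []
    for y, row in enumerate(camera_rgb):
        out_row = []
        for x, real_pixel in enumerate(row):
            v_depth = virtual_depth[y][x]
            if v_depth is not None and v_depth < real_depth[y][x]:
                out_row.append(virtual_rgb[y][x])   # virtual object is in front
            else:
                out_row.append(real_pixel)          # real surface occludes it
        output.append(out_row)
    return output


if __name__ == "__main__":
    # A one-row "image": a chair 1 m away in front of a wall 3 m away,
    # with a virtual dinosaur placed 2 m from the camera.
    camera = [["wall", "chair", "wall"]]
    real_d = [[3.0, 1.0, 3.0]]
    virtual = [["dino", "dino", None]]
    virtual_d = [[2.0, 2.0, None]]
    print(composite(camera, real_d, virtual, virtual_d))
    # [['dino', 'chair', 'wall']]
```

The chair hides the part of the dinosaur behind it, while the dinosaur still covers the more distant wall.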

C. AI‑driven Contextualization

Machine learning models now interpret semantic information (e.g., “this is a plant”). This enables:

- Dynamic Content Adaptation – a biology app can overlay a virtual explainer of photosynthesis on a real leaf.

- Natural Language Interaction – voice commands trigger contextual overlays without touching the device.
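
Once a model has produced a semantic label, choosing the overlay can be as simple as a lookup guarded by a confidence check. In this sketch the `OVERLAYS` table, the function name, and the 0.8 threshold are all hypothetical; the recognition model itself is assumed to exist elsewhere.

```python
# Hypothetical mapping from recognized labels to overlay content.
OVERLAYS = {
    "plant": "Photosynthesis explainer",
    "building": "Construction date and history",
}


def choose_overlay(label, confidence, min_confidence=0.8):
    """Return overlay content for a detection.

    Returns None when the model is not confident enough or when
    no content has been authored for the label.
    """
    if confidence < min_confidence:
        return None
    return OVERLAYS.get(label)


if __name__ == "__main__":
    print(choose_overlay("plant", 0.93))
    print(choose_overlay("plant", 0.40))
    print(choose_overlay("bicycle", 0.99))
```

Suppressing low-confidence detections is one simple way to avoid attaching confident-looking information to the wrong object.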

D. Multi‑user Collaboration

Through cloud‑synchronized spatial anchors, several users can share the same AR experience, seeing each other’s virtual objects in real time. This is the backbone of remote assistance and virtual classrooms.
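
The geometry behind a shared anchor can be sketched in two dimensions. Each device locates the same anchor in its own local coordinate frame, and virtual objects are stored relative to the anchor rather than to any one device. The function name and the tuple layout are assumptions for illustration; real SDKs work with full 3‑D poses.

```python
import math


def anchor_to_local(anchor_pose, offset):
    """Convert a point stored relative to a shared anchor into a device's local frame.

    anchor_pose: (x, y, yaw_radians) of the anchor as seen by this device.
    offset: (dx, dy) of the virtual object in the anchor's own frame.
    """
    ax, ay, yaw = anchor_pose
    dx, dy = offset
    local_x = ax + dx * math.cos(yaw) - dy * math.sin(yaw)
    local_y = ay + dx * math.sin(yaw) + dy * math.cos(yaw)
    return (local_x, local_y)


if __name__ == "__main__":
    shared_offset = (1.0, 0.0)              # object sits 1 m along the anchor's x-axis
    user_a_anchor = (2.0, 0.0, 0.0)         # how user A's device sees the anchor
    user_b_anchor = (0.0, 5.0, math.pi / 2) # how user B's device sees the anchor
    for name, pose in (("A", user_a_anchor), ("B", user_b_anchor)):
        x, y = anchor_to_local(pose, shared_offset)
        print(f"User {name} renders the object at ({x:.2f}, {y:.2f})")
```

Both users end up with different local coordinates for the object, yet it appears at the same physical spot because the only thing they need to agree on is the anchor.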

3. Societal Impact & Ethical Perspectives

| Domain | Positive Potential | Challenges & Concerns |
|---|---|---|
| Education | Immersive labs (e.g., virtual chemistry reactions) boost engagement and retention. | Over‑reliance on screens may reduce hands‑on experimentation. |
| Healthcare | Surgeons use AR overlays for precision guidance; patients visualize anatomy for informed consent. | Data privacy of biometric scans; risk of inaccurate overlays causing errors. |
| Urban Planning | Citizens can preview zoning changes in situ, fostering participatory design. | Digital divide – not everyone can afford AR‑capable devices. |
| Entertainment | Hyper‑realistic gaming and storytelling create new art forms. | Intellectual property infringement when virtual objects replicate copyrighted works. |

Multiple Perspectives:

- Technologists argue that AR democratizes information, turning any surface into an interactive canvas.

- Ethicists caution about “augmented bias,” where algorithms amplify existing societal inequities through selective content.

- Economists project the AR market to exceed $340 billion by 2030, reshaping labor demand across sectors.

4. Careers & Future Pathways in Advanced AR

| Role | Core Skills | Typical Projects |
|---|---|---|
| AR Software Engineer | C++, Unity/Unreal, computer vision, shader programming | Building low‑latency rendering pipelines for HMDs |
| Computer Vision Scientist | Deep learning, SLAM, Python/MATLAB | Developing next‑gen depth‑sensing algorithms |
| Interaction Designer (UX/UI) | Human‑centered design, prototyping, ergonomics | Crafting intuitive gesture vocabularies |
| Hardware Engineer | Optics, PCB design, sensor integration | Designing lightweight waveguide displays |
| Ethics & Policy Analyst | Law, philosophy, data governance | Drafting guidelines for responsible AR deployment |

Tip for Students:

Start by learning Unity or Unreal Engine, experiment with ARKit (iOS) or ARCore (Android), and explore open‑source SLAM libraries like ORB‑SLAM2. Building a small prototype now can be the first step toward a future career in this fast‑growing field.

Simple Activity: DIY AR Scavenger Hunt 📱

- Download a free app that overlays information on the camera view (e.g., Google Lens).

- Create a list of 5 everyday objects (a coffee mug, a poster, a plant, etc.).

- Scan each object with the app and capture the AR overlay it generates (text, 3‑D model, or animation).

- Document the experience: note latency, accuracy of overlay, and any surprising information.

- Reflect: How did the AR content change your perception of the object? Could this be useful in a classroom, a museum, or a workplace? Write a short paragraph summarizing your thoughts.

Quick Quiz

Test your understanding of the concepts covered. Write down your answers, then scroll down to the answer key.

- What does SLAM stand for, and why is it crucial for mobile AR experiences?

- Name two hardware technologies that enable accurate depth perception in AR.

- List one ethical concern associated with widespread AR adoption and a possible mitigation strategy.

- Which programming environments are most commonly used for creating AR applications?

- Explain how multi‑user collaboration is achieved in advanced AR systems.

Answer Key

- Simultaneous Localization and Mapping – it lets a device map its surroundings while tracking its own position, ensuring virtual objects stay correctly anchored as the user moves.

- Examples: LiDAR sensors and Time‑of‑Flight (ToF) cameras (other acceptable answers: structured light projectors and stereo depth cameras).